SEMrush Onpage Site Audit

In this video tutorial you will learn how to use SEMrush’s Site Audit Tool in order to improve the onpage SEO of your website.

Onpage SEO Scores

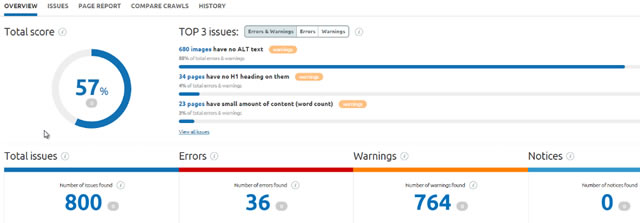

In our example, I ran the site audit tool on marquisyachts.com and it reported back over 800 issues and a score of 57%. SEMrush has 3 different categories of issues: errors, warnings and notices, with errors being the most critical to your website.

SEMrush gives your website a score, but I recommend looking at the different errors, warnings and notices individually to see whether or not they are harming your SEO.

Errors

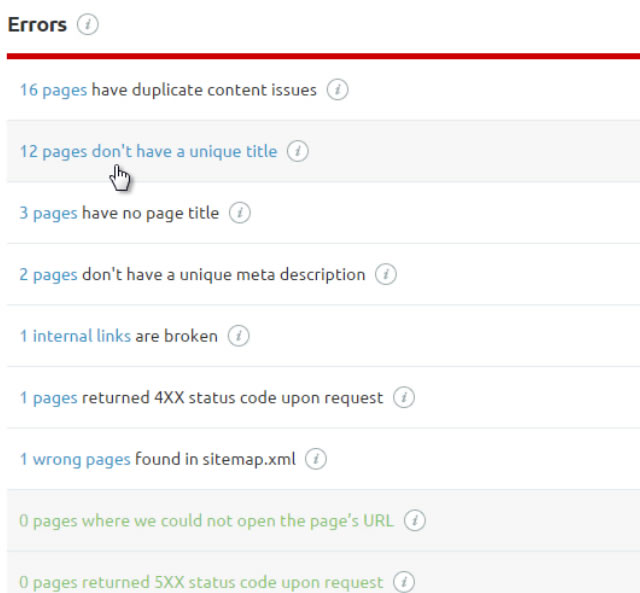

Click on the issues tab and this list displays all of the errors, warnings and notices. Click on the errors tab to show all of the errors. The tool indicates pages with duplicate content, pages without a unique title tag, pages that have no title tag, pages that do not have a unique meta description, broken internal links, pages returning a 400-level error status code (typically a 404 page not found error) and, finally, wrong pages found in the sitemap.

One nice feature is it displays the full list of errors even if your website has 0 occurrences for a specific error.

Looking at the XML sitemap, this page has a 404 error and should not be listed in the XML sitemap. The correct action is to remove this page from the sitemap or to create the page. The page may have moved and in that instance a 301 redirect should be created.
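The sitemap check described above can be sketched in a few lines of Python. This is a minimal illustration, not how SEMrush implements it: the function names, the example.com URLs and the `status_by_url` mapping (which you would fill in from your own crawl) are all assumptions.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls(sitemap_xml: str) -> list:
    """Return every <loc> URL listed in a sitemap document."""
    root = ET.fromstring(sitemap_xml)
    return [loc.text.strip() for loc in root.findall(".//sm:loc", SITEMAP_NS) if loc.text]


def broken_sitemap_entries(sitemap_xml: str, status_by_url: dict) -> list:
    """List sitemap URLs whose known HTTP status is a 4xx error."""
    broken = []
    for url in sitemap_urls(sitemap_xml):
        status = status_by_url.get(url)  # None means the URL was not crawled
        if status is not None and 400 <= status < 500:
            broken.append(url)
    return broken


# Hypothetical sample data for illustration only.
SAMPLE_SITEMAP = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/</loc></url>
  <url><loc>https://example.com/old-page</loc></url>
</urlset>"""

SAMPLE_STATUSES = {
    "https://example.com/": 200,
    "https://example.com/old-page": 404,
}

if __name__ == "__main__":
    for url in broken_sitemap_entries(SAMPLE_SITEMAP, SAMPLE_STATUSES):
        print("Remove from sitemap, recreate, or 301 redirect:", url)
```

Each URL it reports is a candidate for one of the three fixes above: remove it from the sitemap, create the page, or add a 301 redirect.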

The page returning the 404 is the same page targeted by the broken internal link on the homepage. This one page causes multiple errors.

Similar Content

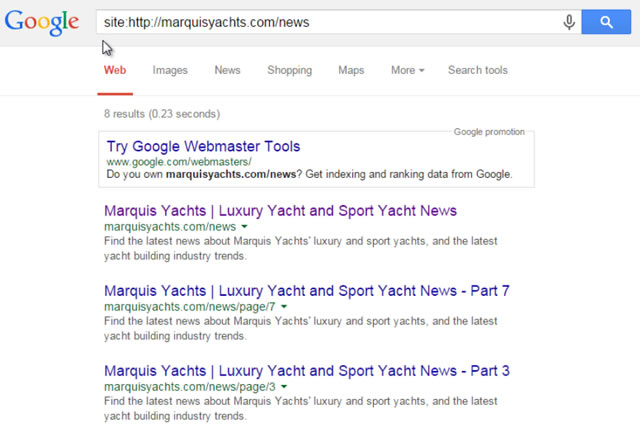

2 pages do not have unique meta descriptions. A site: search in Google reveals that even more pages have similar meta description issues. Each of the different subpages has the same meta description, “Find the latest news about Marquis Yachts.” This may or may not be an issue that requires action. Adding the noindex tag to the archive pages will prevent duplicate content issues in Google and eliminate duplicate meta descriptions at the same time.

For example, this page will have overlapping content with this inner page. You can see the text “The inland seas region of the United States” appears on both pages.

To prevent this from happening, write unique excerpts. This is particularly important for anyone blogging. One thing this WordPress theme does well is that, if no excerpt is present, it only shows a summary of the post instead of displaying the entire post on the archive page.
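If you add the noindex tag to archive pages as suggested earlier, it helps to confirm the tag is actually present in the page source. The sketch below is one hedged way to do that with Python's standard library. The function name and the sample HTML are assumptions made for illustration, and it only looks at the robots meta tag, not at HTTP headers.

```python
from html.parser import HTMLParser


class _RobotsMetaParser(HTMLParser):
    """Collects the directives found in <meta name="robots" content="...">."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = dict(attrs)
        if (attr.get("name") or "").lower() == "robots":
            content = attr.get("content") or ""
            for part in content.split(","):
                if part.strip():
                    self.directives.add(part.strip().lower())


def is_noindexed(html: str) -> bool:
    """Return True if the page carries a robots meta tag containing noindex."""
    parser = _RobotsMetaParser()
    parser.feed(html)
    parser.close()
    return "noindex" in parser.directives


# Hypothetical archive page markup for illustration only.
ARCHIVE_PAGE = '<html><head><meta name="robots" content="noindex, follow"></head></html>'
NORMAL_PAGE = "<html><head><title>News</title></head></html>"

if __name__ == "__main__":
    print(is_noindexed(ARCHIVE_PAGE))
    print(is_noindexed(NORMAL_PAGE))
```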

This Site Audit Tool can help find pages that have been forgotten about or abandoned. These 3 pages do not have a page title. Upon further investigation, the title tag matches the URL. This is an opportunity to create unique title tags for each of the pages.

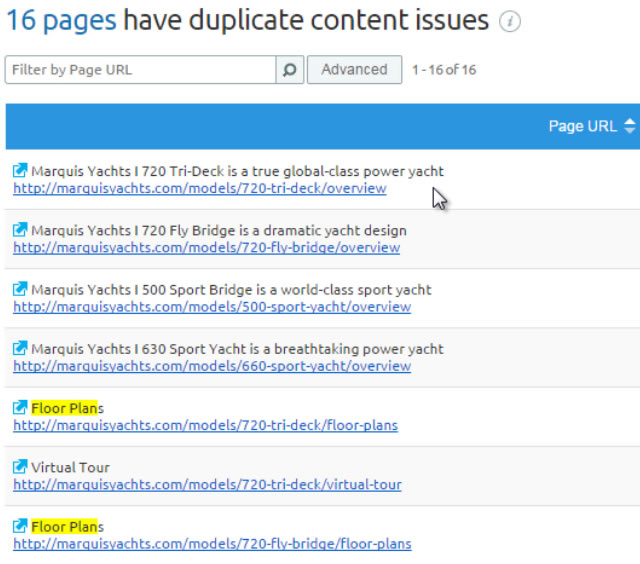

12 pages do not have unique titles. Multiple pages share the same title tag, “Floor Plans.” However, one page is for the Model 500 and the other for the Model 720. This is an easy fix: just update the title tag to include the model number. As a tip, adjust the number of issues that you display per page by using the drop down option.
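Finding pages that share a title comes down to grouping URLs by title text. Here is a minimal sketch of that idea. The URL paths are hypothetical, and the case-insensitive comparison is an assumption for illustration rather than SEMrush's documented behavior.

```python
from collections import defaultdict


def duplicate_titles(titles_by_url: dict) -> dict:
    """Group URLs by normalized title and keep only titles used more than once."""
    pages_by_title = defaultdict(list)
    for url, title in titles_by_url.items():
        # Normalize so "Floor Plans" and "floor plans " count as the same title.
        pages_by_title[title.strip().lower()].append(url)
    return {
        title: sorted(urls)
        for title, urls in pages_by_title.items()
        if len(urls) > 1
    }


# Hypothetical crawl results for illustration only.
SAMPLE_TITLES = {
    "/model-500/floor-plans": "Floor Plans",
    "/model-720/floor-plans": "Floor Plans",
    "/brochure": "Brochure",
}

if __name__ == "__main__":
    for title, urls in duplicate_titles(SAMPLE_TITLES).items():
        print(title, "->", urls)
```

Once the duplicates are grouped, the fix is the one described above: give each page a distinguishing detail such as the model number.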

Duplicate Content

The biggest overall issue for this website is duplicate content. 16 pages have errors. If you are unsure about which pages are duplicates, use the similar page check option. Based on the matching page titles, SEMrush marked these pages as duplicates. All of them have “Image Gallery” as the title. On each page, the only thing that changes is a small portion of text, and the rest of the page does not appear to load. This hurts the user experience and Google might penalize sites with too many of these errors.

Four of the floor plans pages appear to be duplicates. The loading error may be caused by browser compatibility issues and should be investigated further. If everything worked correctly, these pages are probably not duplicates. Using the SEMrush Site Audit Tool, we were able to identify multiple errors requiring attention.

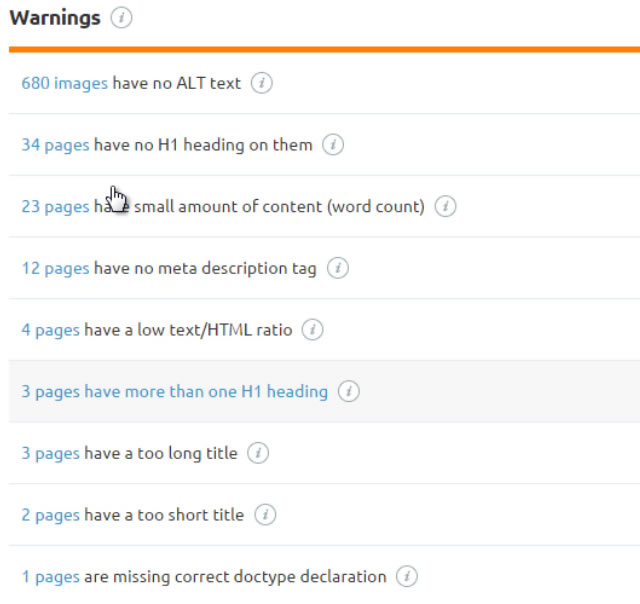

Warnings

Next, go to the warnings tab to see what issues can be resolved. The website does well on a number of checks; however, there are some outstanding warning messages.

There is no link to the XML sitemap found in the robots.txt file. The robots.txt file contains a few instructions. However, adding a Sitemap line that tells robots where the sitemap is can improve crawling and indexing. Adding more Disallow rules can help keep robots from crawling unwanted subfolders.
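As a rough illustration, the sketch below uses Python's standard `urllib.robotparser` module to check whether a robots.txt file declares a sitemap and whether a folder is disallowed. The robots.txt contents, the example.com URLs and the `/wp-admin/` folder are hypothetical. `site_maps()` requires Python 3.8 or later.

```python
from urllib.robotparser import RobotFileParser


def parse_robots(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text that has already been downloaded."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def declared_sitemaps(robots_txt: str) -> list:
    """Return the sitemap URLs declared in robots.txt (empty list if none)."""
    return parse_robots(robots_txt).site_maps() or []


# Hypothetical robots.txt files for illustration only.
ROBOTS_WITH_SITEMAP = """User-agent: *
Disallow: /wp-admin/
Sitemap: https://example.com/sitemap.xml
"""

ROBOTS_WITHOUT_SITEMAP = """User-agent: *
Disallow: /wp-admin/
"""

if __name__ == "__main__":
    print(declared_sitemaps(ROBOTS_WITH_SITEMAP))
    print(declared_sitemaps(ROBOTS_WITHOUT_SITEMAP))
    rules = parse_robots(ROBOTS_WITH_SITEMAP)
    print(rules.can_fetch("*", "https://example.com/wp-admin/settings"))
```

An empty list from `declared_sitemaps` corresponds to the warning discussed above.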

The next two warnings are related to the news feed page. The news feed does not contain the correct language declaration and document declaration. To correct this warning, the page needs to declare whether it is an XML or HTML file and what language it uses, such as English.

2 pages have title tags that are too short: Sitemap and Brochure. In this instance, no changes are required, but the brand name could be added to the end of the title tag to remove this warning.

3 pages have title tags that are too long. You can see these title tags will be automatically shortened by Google or Bing. This issue is not critical, but removing Marquis Yachts from the beginning of each title tag can fix the warning.
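Both title length warnings can be reproduced with a simple character count. The sketch below is only an approximation: the 10 and 70 character limits are assumptions chosen for illustration, not thresholds confirmed by SEMrush, and search engines actually truncate titles by display width rather than by a fixed character count.

```python
from typing import Optional

# Assumed limits for illustration; adjust to match the tool you are using.
MIN_TITLE_CHARS = 10
MAX_TITLE_CHARS = 70


def title_length_warning(title: str) -> Optional[str]:
    """Return 'too short', 'too long', or None if the length looks fine."""
    length = len(title.strip())
    if length < MIN_TITLE_CHARS:
        return "too short"
    if length > MAX_TITLE_CHARS:
        return "too long"
    return None


if __name__ == "__main__":
    for title in ["Sitemap", "Brochure", "Brochure | Marquis Yachts"]:
        print(repr(title), "->", title_length_warning(title))
```

Appending the brand name, as suggested for the Sitemap and Brochure pages, is enough to clear the short-title check under these assumed limits.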

Thin Content

4 pages have a low text to HTML ratio. This means there is a high percentage of code on the page relative to the visible text. These four pages have little or no content. This is the main /video page and has no content. This page is a simple map, which needs no changes. However, there are two more dynamically generated video pages that are duplicates of the /video page. The best option is to remove these 2 dynamic pages from the index and upload the video to the /video page.
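To make the text to HTML ratio concrete, here is a minimal sketch that divides the length of the visible text by the length of the full source. The exact formula SEMrush uses is not stated here, so treat this definition, the function name and the sample markup as assumptions.

```python
from html.parser import HTMLParser


class _VisibleTextParser(HTMLParser):
    """Collects text nodes, skipping the contents of <script> and <style>."""

    def __init__(self):
        super().__init__()
        self._skip_depth = 0
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.chunks.append(data)


def text_to_html_ratio(html: str) -> float:
    """Return visible text length divided by total HTML length (0.0 to 1.0)."""
    if not html:
        return 0.0
    parser = _VisibleTextParser()
    parser.feed(html)
    parser.close()
    # Collapse runs of whitespace so indentation does not inflate the count.
    text = " ".join(" ".join(parser.chunks).split())
    return len(text) / len(html)


if __name__ == "__main__":
    page = "<html><body><p>Hello</p></body></html>"
    print(round(text_to_html_ratio(page), 3))
```

A page such as the /video page, which has markup but almost no visible text, would score close to zero under this definition.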

12 pages do not have a meta description. The sitemap, news page and some of the pages examined have this warning. Evaluate on a case by case basis to assess if it is worth taking the time to add a meta description. Most search engines automatically generate one, but unique descriptions can increase clicks to your website. On the other hand, having to create thousands of descriptions on an ecommerce website probably is not the highest and best use of your time.

Most of these pages you probably do not intend to rank in Google. The best practice is to create a unique meta description for each page. For example, for the brochure page a meta description might read, “Want more information, request a brochure featuring all of our different yacht models.”

23 pages have a low word count. Here are 3 different examples. The first is the boat show registration page. This page has little or no text and can be removed from the index because pre-registration has closed.

Owner’s manual is simply a drop down option where a customer can select from the different yacht models and download the brochure. There are no big changes that need to be made to this page.

Finally, the last page contains a PDF to download and some text. The content is thin. Use your best judgement to determine if a page should be kept in the index. I believe this page has value to visitors, but should have some more content added to it.

Be sure to check if the content is being duplicated on other pages. For example, pages with one image are often part of a gallery, or a PDF document page may belong to a library featuring the same PDF and other related documents. If this occurs, the thin content page should be removed from the index.

Missing Data

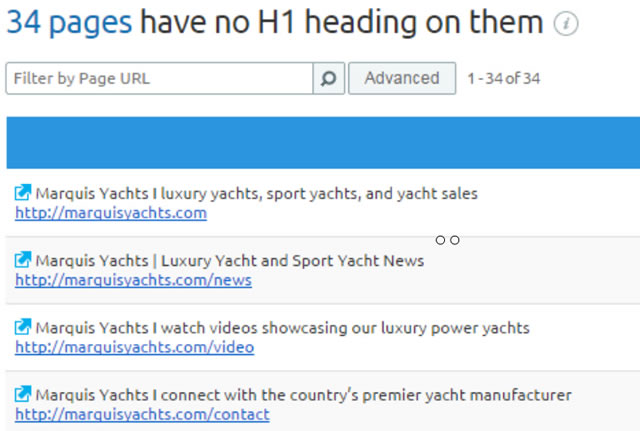

34 pages do not have an H1 heading tag. This is a matter of preference. Some SEOs prefer H1 tags, others like H2 and H3 tags and some do not use heading tags at all. The most important thing to consider is how it affects the user experience.

Be sure to avoid overoptimization caused by closely matching heading tags and title tags. For example, avoid a title tag of “What is a Yacht?” followed by an H1 tag of “What is the best yacht?” and an H2 tag of “What is a sport yacht?” Use synonyms instead.

Finally, 680 images do not have ALT text. This is an easy fix, but remember to avoid overoptimization and simply describe what the image is about. Do not keyword stuff. The source code shows some of the images do contain alt text, and others do not.
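Checking the source code for missing ALT text by hand gets tedious with hundreds of images. The following sketch lists the `src` of every image whose `alt` attribute is missing or empty. The function name and the sample markup are hypothetical. Note that an empty `alt` is sometimes used on purpose for purely decorative images, so review the results rather than filling in every one.

```python
from html.parser import HTMLParser


class _ImageAltParser(HTMLParser):
    """Records the src of each <img> that has no usable alt text."""

    def __init__(self):
        super().__init__()
        self.missing_alt = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attr = dict(attrs)
        # Treat a missing, valueless, or whitespace-only alt as missing.
        if not (attr.get("alt") or "").strip():
            self.missing_alt.append(attr.get("src") or "")


def images_missing_alt(html: str) -> list:
    """Return the src values of images without ALT text, in document order."""
    parser = _ImageAltParser()
    parser.feed(html)
    parser.close()
    return parser.missing_alt


# Hypothetical markup for illustration only.
SAMPLE_HTML = """
<img src="/img/yacht-500.jpg" alt="Model 500 yacht at anchor">
<img src="/img/yacht-720.jpg">
<img src="/img/spacer.gif" alt="" />
"""

if __name__ == "__main__":
    for src in images_missing_alt(SAMPLE_HTML):
        print("Missing ALT text:", src)
```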

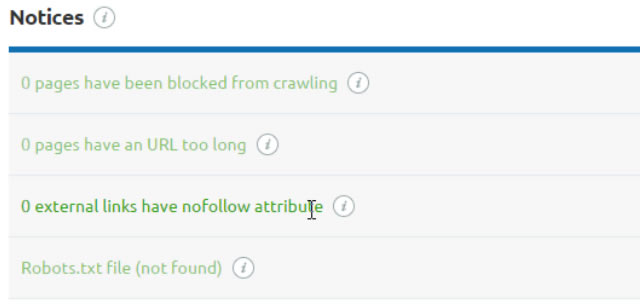

Notices

Now go to the Notices tab. It shows Marquis Yachts has 0 notices that need to be fixed. The website has a robots.txt file. 0 external links have the nofollow attribute; however, in many instances, like banner advertisements, the external links should have the nofollow attribute. Evaluate on a case by case basis.

No pages have a URL that is too long. This is rarely an issue for non-ecommerce websites. Ecommerce websites have a tendency to generate dynamic pages with long URLs.

0 pages have been blocked from robots for crawling. A notice will appear if you disallow any pages or folders in robots.txt. This is standard practice.

After watching this video you should know how to use the SEMrush Site Audit Tool and how to determine whether or not action is required based on the different errors, warnings and notices. This is just one way you can improve the onpage SEO of your website.

In the next video, you will learn how to perform a Competitive Site Audit to analyze which competitors have strong and weak onpage SEO relative to your website.